"I see plans within plans"

Actually, it was not planned to do git bisect and work on a regression. It’s classic — the plan I had at the start differs from what it became during the sprint running. I was working on a pf bug report and during its analysis I faced some strange behavior from DTrace with the following simple tracing scenario:

$ dtrace -n 'fbt:pf:hook_pf:entry'

$ pfctl -eI expected to see a single probe hit, but it was like stuck in a loop:

...

4 59199 hook_pf:entry

4 59199 hook_pf:entry

4 59199 hook_pf:entry

4 59199 hook_pf:entry

dtrace: 8893079 drops on CPU 4Well, as long as DTrace is one of my main tools, and I was in the seek mode for "problems to learn from", I decided to research this obstacle and fix it.

The time has come to automate

I’m not one of the DTrace authors or active maintainers to have some quick hypotheses popping up in my head of why and where something could go wrong. One of my best strategies here is to apply a binary search for the root cause commit. And hope that such commit is not a big change to analyze.

Presumably, I will need to do a lot of FreeBSD builds. I knew this day would come — when it’s required to add more automation to my so called "FreeBSD lab". This task has been postponed so far, and now I wish to have additional tools at my disposal:

-

BUILD — Easily launch full build (world + kernel) for a given commit with a single shell command.

-

NOTIFY — Get notified when a long job is finished.

-

INSTALL — Easily create test stand out of the build artifacts, with a single command.

I guess every OS developer has their own nifty and clever work environment with various automation. So, I continued to evolve mine. My modest tiny lab is based on qemu @ macOS and the good enough solution for these three wishes in my case is as follows.

build

The build goes in a separate build VM. It’s based on something stable like RELEASE. A separate /usr/src filesystem is used, so the root fs can be easily switched to another RELEASE, and src fs can also be re-used by another VM not to have multiple git repo clones. The result, /usr/obj, is collected in a separate fs — a new fs file is created for every build. This is the layout overview with some intuition of the sizes of the components:

install

The installation process can be done within any VM due to obj fs contains all the prerequisites inside: the usual /usr/obj content and the related sources copied from src fs (without .git repo itself). And the DESTDIR is the root fs of another VM — the test stand:

test

All the needed checks and plays happen in a separate test vm after the installation:

build and test in parallel

As long as every build creates a brand new obj fs which presents a semi-finished product, the installation & test process may happen in parallel with another build being in progress. The obj fs has a copy of the sources used to cook its object files, i.e. a consistent installation mechanism may take place using exactly the same origin:

The dwarves

This trivial solution can easily be automated with some shell script(s). My implementation provides the following interface to launch single or multiple builds (in sequence):

$ eitri baarch64/b4db386f9fa7 [ <build-vm>/<git-commit-ref> ... ]And the installation is ordered the following way:

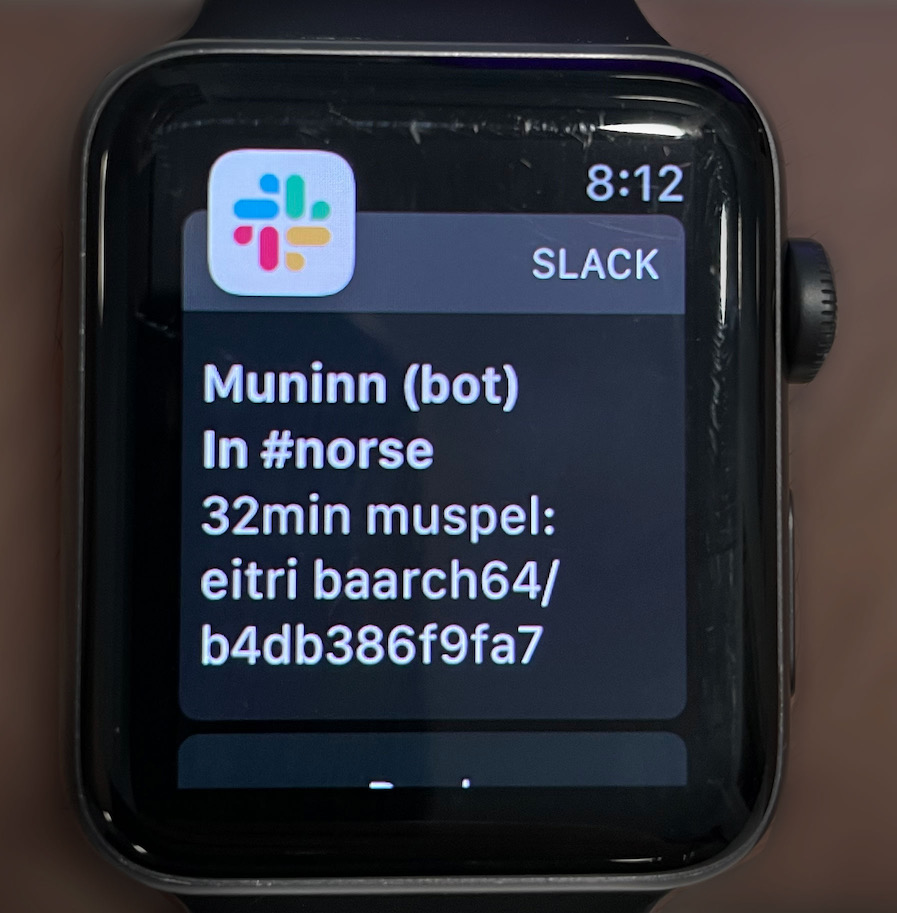

$ brokkr baarch64/b4db386f9fa7/taarch64Another script has been serving me for many years providing notification services via a personal Slack group. So, I can simply reuse it as is:

$ muninn eitri baarch64/b4db386f9fa7…to receive the respective build finish notification even if I’m away from the computers:

Are we there yet?

Okay, additional automation and separation of concerns save some administration time. But I’m not the lucky one to have resources for ultra fast builds. I managed to reduce my buildworld time from ~3 hrs down to 0.5 hrs, but it’s still too much if it’s multiplied by the git bisect steps.

To speed up the process I decided to apply situation-based tactics according to the following facts, assumptions, and the very first results:

-

I tested the latest release, 13.2, and as I expected it was a "good" one.

-

Hence, I had the assumption that this specific regression should not be very old, I started from several discrete points in the near past — I expected to find a "good" commit of CURRENT within the 2023 year.

-

And the first build turned out to be a "good" one, of May 2023.

-

Git bisect says that around 12 steps are expected between "good" in May 2023 and "bad" in Sep 2023. Well, 12*0.5 = 6 hrs of builds with periodic administration in between looks unattractive.

-

Having some knowledge of DTrace architecture I assumed that it’s most likely an issue on the kernel side. Thus, I could go up from May 2023 using the same world of this "good" commit and rebuild kernel only. And I will do the full build if I find it broken without the aligned world.

-

Luckily, my usual full kernel build is relatively quick — 3 min versus 30+ min with the previous setup. So, such an approach really saved a lot of time, and with the narrowing of the commit range the

META_MODEbased kernel builds began to take ~9 seconds.

Such way it was much faster than 6 hrs of 12 full builds plus manual administration and it required only two full builds: the May 2023 one to find the first "good" and the final verification of the discovered root cause commit.

And what about the regression itself?

The FreeBSD project keeps its reputation for high code standards and development best practices — I faced a small logical commit. Therefore, the best practice of as-small-as-possible-logical-commit yielded its dividends — it was a simple task to spot the defect without being a DTrace developer.

Copyright © Igor Ostapenko

(handmade content)

Post a comment